-

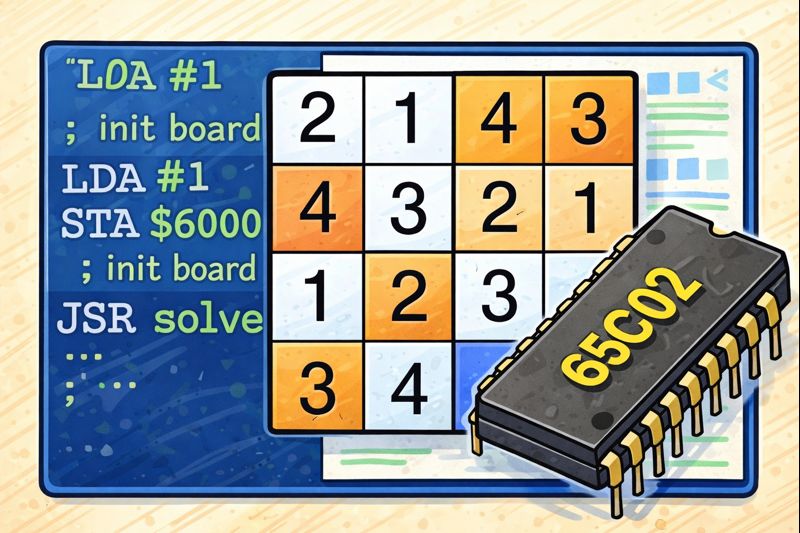

Solving a Mini Sudoku in 6502 Assembly

A deep dive into solving 4x4 mini-Sudokus using a recursive backtracking algorithm written entirely in 6502 assembly, including design decisions, memory layout, and emulator-based inspection.
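The post's solver is written in 6502 assembly. As a higher-level reference for the algorithm itself, here is a minimal Python sketch of recursive backtracking on a 4x4 grid. It assumes that 0 marks an empty cell and that the grid uses the usual 2x2 box constraint. The names `can_place` and `solve` are hypothetical, and the sketch is not a transcription of the assembly version.

```python
from typing import List

Grid = List[List[int]]  # 4x4, values 1..4, 0 = empty (assumed encoding)


def can_place(grid: Grid, r: int, c: int, v: int) -> bool:
    """Check the row, column, and 2x2 box constraints for value v at (r, c)."""
    if v in grid[r]:
        return False
    if any(grid[i][c] == v for i in range(4)):
        return False
    br, bc = 2 * (r // 2), 2 * (c // 2)  # top-left corner of the 2x2 box
    return all(grid[i][j] != v for i in range(br, br + 2) for j in range(bc, bc + 2))


def solve(grid: Grid, pos: int = 0) -> bool:
    """Fill cells in row-major order, undoing a guess when it leads to a dead end."""
    if pos == 16:
        return True  # every cell is filled consistently
    r, c = divmod(pos, 4)
    if grid[r][c] != 0:
        return solve(grid, pos + 1)  # given clue: skip it
    for v in range(1, 5):
        if can_place(grid, r, c, v):
            grid[r][c] = v
            if solve(grid, pos + 1):
                return True
            grid[r][c] = 0  # backtrack and try the next value
    return False
```

The grid is modified in place, and the recursion depth is bounded by the 16 cells, with one call per cell.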

-

The Hexadecimal Digit Canon Challenge

A combinatorial programming challenge set in base 16: define a digit-canonical form for hexadecimal numbers and compute the cumulative sum of these canonical values across rapidly growing digit ranges. It is simple to state but requires careful counting and optimization at scale.

-

Short Notes: Equal Partitions, Products, and Decimal Structure

We study how splitting an integer into equal parts affects the maximum attainable product and how arithmetic properties of the optimum emerge. In particular, we relate a continuous optimization problem to the decimal structure of the resulting rational values.
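The following is a minimal sketch of one reading of this problem. It assumes the goal is to find, for an integer n, the number of equal parts k that maximizes (n/k)^k, and then to ask whether that maximum has a terminating decimal expansion. Maximizing k ln(n/k) over real k gives k = n/e. Because that function is concave, the integer optimum is one of the two integers next to n/e, and the sketch compares them exactly using integer arithmetic. The decimal test relies on the fact that (n/k)^k terminates exactly when n/k does, since powers of a reduced fraction keep the same primes in the denominator. All function names are hypothetical.

```python
import math
from fractions import Fraction


def best_k(n: int) -> int:
    """Number of equal parts k maximizing (n/k)**k (hypothetical helper).

    k*ln(n/k) is concave in k with its real maximum at k = n/e, so the
    integer optimum is floor(n/e) or floor(n/e) + 1 (k is at least 1).
    """
    k = max(1, math.floor(n / math.e))
    # Exact test: (n/k)^k >= (n/(k+1))^(k+1)  <=>  (k+1)^(k+1) >= n * k^k
    if (k + 1) ** (k + 1) >= n * k ** k:
        return k
    return k + 1


def has_terminating_decimal(q: Fraction) -> bool:
    """True if q's reduced denominator has no prime factors other than 2 and 5."""
    d = q.denominator  # Fraction always stores lowest terms
    for p in (2, 5):
        while d % p == 0:
            d //= p
    return d == 1


def optimum_terminates(n: int) -> bool:
    # (n/k)^k terminates iff n/k does: powers keep the same denominator primes
    return has_terminating_decimal(Fraction(n, best_k(n)))
```

For n = 11 this gives k = 4, and 11/4 = 2.75 terminates. For n = 8 it gives k = 3, and 8/3 does not terminate. The use of `math.floor(n / math.e)` is a floating-point shortcut that is fine for moderate n.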

-

Short Notes: On a Curious Prefix-Sum Problem

A deceptively simple recurrence leads to an unexpected challenge when computing its prefix sums. Solving it efficiently requires looking at the problem from a different angle.

-

Short Notes: Summing Non-Isolated Divisors Across All Subsets

A step-by-step combinatorial derivation of an efficient algorithm to compute S(n), the total sum of subset elements that divide another element in the same subset. The post shows how an exponential brute-force computation can be replaced by a fast method that uses number-theoretic structure and closed-form counting.
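The following is a minimal sketch of the closed-form counting idea. It assumes that the subsets range over {1, ..., n} and that an element x counts when the subset contains some other element divisible by x. For each x there are m = floor(n/x) - 1 larger multiples of x in range. Of the 2^(n-1) subsets containing x, exactly 2^(n-1-m) avoid all of those multiples, so x contributes x(2^(n-1) - 2^(n-1-m)). The brute-force function is included only as a cross-check for small n. Both function names are hypothetical, and the post's own method may group terms further than this linear-time loop.

```python
def s_closed(n: int) -> int:
    """S(n) by counting, per element x, the subsets where x divides another member."""
    total = 0
    for x in range(1, n + 1):
        m = n // x - 1                  # multiples 2x, 3x, ... that lie in 1..n
        with_x = 1 << (n - 1)           # subsets containing x
        no_multiple = 1 << (n - 1 - m)  # ...that contain none of those m multiples
        total += x * (with_x - no_multiple)
    return total


def s_brute(n: int) -> int:
    """Exponential cross-check: enumerate every subset of {1..n} explicitly."""
    total = 0
    for mask in range(1 << n):
        subset = [i + 1 for i in range(n) if (mask >> i) & 1]
        for x in subset:
            if any(y != x and y % x == 0 for y in subset):
                total += x
    return total
```

For example, `s_closed(4)` is 15. The element 1 contributes 7, which counts the subsets containing 1 and at least one of 2, 3, and 4. The element 2 contributes 2 · 4 = 8, which counts the subsets containing both 2 and 4.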