BitBully

One of the fastest perfect-playing Connect-4 solvers around

BitBully is a high-performance, perfect-playing Connect-4 solver and analysis engine written in C++ with Python bindings. It’s designed for both developers and researchers who want to explore game-theoretic strategies or integrate a strong Connect-4 AI into their own projects.

🚀 Key Features

- Blazing Fast Solving: Uses MTD(f) and null-window search algorithms.

- Bitboard Engine: Board states are handled efficiently via low-level bitwise operations.

- Advanced Heuristics: Threat detection, move ordering, and transposition tables.

- Opening Databases: Covers all positions with up to 12 tokens, annotated with exact win/loss distances.

- Cross-Platform: Compatible with Linux, Windows, and macOS.

- Python API: Seamlessly integrates into Python projects via `bitbully_core` (powered by pybind11).

- Open Source: Available under the AGPL-3.0 license.
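The bitboard and search features above can be illustrated with a small pure-Python sketch. It stores a position as two integers in the column-major layout popularized by Pascal Pons's solver tutorial (7 bits per column: 6 cells plus an always-empty sentinel bit), detects four-in-a-row with shifts and ANDs, and computes the exact score with a fail-soft null-window negamax driven by MTD(f). All names (`Board`, `negamax`, `mtdf`) and the scoring convention are assumptions for illustration. This is not BitBully's C++ code. It has no transposition table, threat analysis, or opening book, so it is only practical close to the end of a game.

```python
WIDTH, HEIGHT = 7, 6
H1 = HEIGHT + 1  # bits per column: 6 playable cells plus 1 empty sentinel bit
# Explore centre columns first; better move ordering gives more cutoffs.
ORDER = sorted(range(WIDTH), key=lambda c: abs(c - WIDTH // 2))


def bottom_mask(col: int) -> int:
    return 1 << (col * H1)


def top_mask(col: int) -> int:
    return 1 << (col * H1 + HEIGHT - 1)


def column_mask(col: int) -> int:
    return ((1 << HEIGHT) - 1) << (col * H1)


def alignment(pos: int) -> bool:
    """Return True if the stones in `pos` contain four in a row."""
    # Shifts: 1 = vertical, H1 = horizontal, HEIGHT and H1 + 1 = the two diagonals.
    for shift in (1, H1, HEIGHT, H1 + 1):
        m = pos & (pos >> shift)  # bit i set: cells i and i+shift both occupied
        if m & (m >> (2 * shift)):  # two such pairs back to back = four in a row
            return True
    return False


class Board:
    def __init__(self, position: int = 0, mask: int = 0, moves: int = 0) -> None:
        self.position = position  # stones of the player to move
        self.mask = mask  # stones of both players
        self.moves = moves

    def can_play(self, col: int) -> bool:
        return (self.mask & top_mask(col)) == 0

    def play(self, col: int) -> None:
        self.position ^= self.mask  # now holds the opponent's stones
        self.mask |= self.mask + bottom_mask(col)  # the carry drops a stone into col
        self.moves += 1

    def is_winning_move(self, col: int) -> bool:
        new_stone = (self.mask + bottom_mask(col)) & column_mask(col)
        return alignment(self.position | new_stone)

    def negamax(self, alpha: int, beta: int) -> int:
        """Fail-soft negamax. Score > 0: side to move wins (sooner = larger);
        0: draw; < 0: loss. Assumes nobody has already won."""
        if self.moves == WIDTH * HEIGHT:
            return 0  # board full: draw
        for col in range(WIDTH):
            if self.can_play(col) and self.is_winning_move(col):
                return (WIDTH * HEIGHT + 1 - self.moves) // 2
        max_score = (WIDTH * HEIGHT - 1 - self.moves) // 2  # no immediate win
        if beta > max_score:
            beta = max_score
            if alpha >= beta:
                return beta
        best = -WIDTH * HEIGHT
        for col in ORDER:
            if self.can_play(col):
                child = Board(self.position, self.mask, self.moves)
                child.play(col)
                score = -child.negamax(-beta, -alpha)
                if score >= beta:
                    return score  # fail high: a lower bound on the true score
                best = max(best, score)
                alpha = max(alpha, score)
        return best  # upper bound (exact if strictly inside the original window)


def mtdf(board: Board, guess: int = 0) -> int:
    """MTD(f): pin down the exact score using only null-window searches."""
    lower, upper = -WIDTH * HEIGHT, WIDTH * HEIGHT
    g = guess
    while lower < upper:
        beta = max(g, lower + 1)
        g = board.negamax(beta - 1, beta)  # null window (beta - 1, beta)
        if g < beta:
            upper = g  # failed low
        else:
            lower = g  # failed high
    return g


if __name__ == "__main__":
    b = Board()
    for col in [2, 0, 3, 0, 4]:  # first player builds an open three on the bottom row
        b.play(col)
    print(mtdf(b))  # -18: the second player, to move, cannot block both ends
```

Without a transposition table, every MTD(f) pass re-searches the same nodes, which is why practical engines pair null-window search with a hash table.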

📦 Installation

Install the latest stable release from PyPI:

```bash
pip install bitbully
```

No compilation needed—pre-built wheels included!

🧠 Example Usage (Python)

```python
import bitbully as bb

agent = bb.BitBully()
board = bb.Board()

while not board.is_game_over():
    board.play(agent.best_move(board))

print(board)
print("Winner:", board.winner())
```

You can also solve boards defined as NumPy arrays, use opening books, and generate random game states. For more examples, check out the docs or the GitHub repository.
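Here is one way a NumPy-style grid could be turned into bitboards. The encoding is an assumption for illustration: a 6x7 grid with row 0 at the top, 0 for empty, 1 for the first player, and 2 for the second. The function `grid_to_bitboards` is hypothetical and not part of BitBully's API. It uses a column-major layout with one spare sentinel bit per column, and it rejects grids with floating stones or impossible stone counts.

```python
WIDTH, HEIGHT = 7, 6
H1 = HEIGHT + 1  # one spare sentinel bit above each column


def grid_to_bitboards(grid):
    """Hypothetical helper: return (position, mask, moves) for a HEIGHT x WIDTH grid.

    Assumed encoding: grid[0] is the TOP row; 0 = empty, 1 = first player,
    2 = second player. Works for nested lists or a 2-D NumPy integer array.
    `position` holds the stones of the player to move, `mask` all stones.
    """
    if len(grid) != HEIGHT or any(len(row) != WIDTH for row in grid):
        raise ValueError("expected a 6x7 grid")
    p1 = p2 = 0
    for r in range(HEIGHT):
        for c in range(WIDTH):
            bit = 1 << (c * H1 + (HEIGHT - 1 - r))  # bitboard row 0 is the bottom
            cell = int(grid[r][c])
            if cell == 1:
                p1 |= bit
            elif cell == 2:
                p2 |= bit
            elif cell != 0:
                raise ValueError(f"unexpected cell value {cell}")
    n1, n2 = bin(p1).count("1"), bin(p2).count("1")
    if n1 - n2 not in (0, 1):  # first player is never behind or two stones ahead
        raise ValueError("impossible stone counts")
    mask = p1 | p2
    for c in range(WIDTH):
        column = (mask >> (c * H1)) & ((1 << HEIGHT) - 1)
        if column & (column + 1):  # stones must form a solid stack from the bottom
            raise ValueError(f"floating stone in column {c}")
    moves = n1 + n2
    position = p1 if moves % 2 == 0 else p2  # even count: first player to move
    return position, mask, moves
```

A 2-D NumPy array can be passed unchanged, because the function only relies on `len`, row iteration, indexing, and `int()` on each cell.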

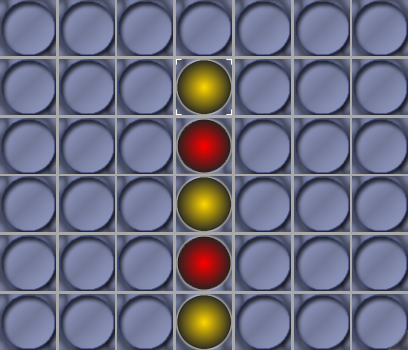

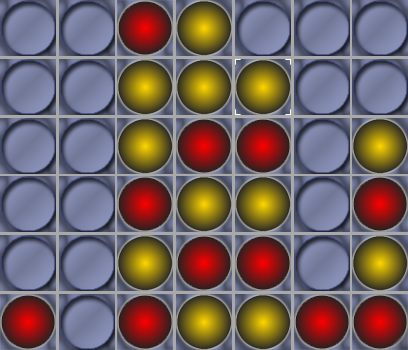

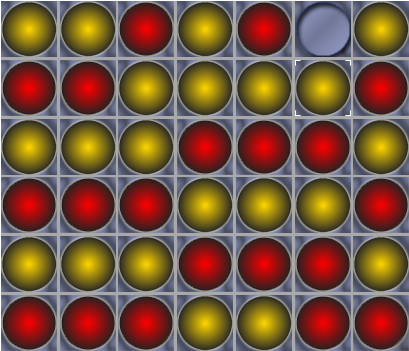

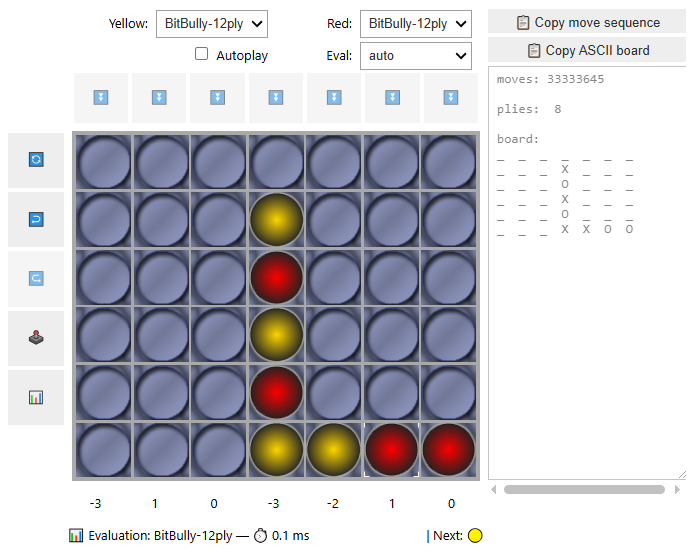

🎮 Play a Game of Connect-4 with a simple Jupyter Notebook Widget

BitBully includes an interactive Connect-4 widget for Jupyter built with ipywidgets and Matplotlib. The widget, GuiC4, renders a 6x7 board using image sprites, supports click-to-play or column buttons, provides undo/redo, can trigger a computer move using the BitBully engine (optionally with an opening book database), and shows win/draw popups. It's intended for quick experimentation and demos inside notebooks and works best with the interactive `%matplotlib ipympl` backend.

Jupyter Notebook: notebooks/game_widget.ipynb
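Undo/redo of the kind the widget offers is commonly built from two stacks of moves. The sketch below is a hypothetical, GUI-free illustration; the class `MoveHistory` and its methods are not GuiC4's API. Undoing moves a column from the history stack onto a redo stack, redoing moves it back, and playing a new move clears the redo stack. The board can then be rebuilt by replaying `moves` from an empty position.

```python
from typing import List, Optional, Tuple


class MoveHistory:
    """Hypothetical undo/redo bookkeeping for a Connect-4 GUI (column indices)."""

    def __init__(self) -> None:
        self._done: List[int] = []  # moves currently on the board, oldest first
        self._undone: List[int] = []  # moves taken back, most recently undone last

    def play(self, col: int) -> None:
        self._done.append(col)
        self._undone.clear()  # a new move discards the old redo branch

    def undo(self) -> Optional[int]:
        if not self._done:
            return None
        col = self._done.pop()
        self._undone.append(col)
        return col

    def redo(self) -> Optional[int]:
        if not self._undone:
            return None
        col = self._undone.pop()
        self._done.append(col)
        return col

    @property
    def moves(self) -> Tuple[int, ...]:
        return tuple(self._done)
```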

📜 License

AGPL-3.0.

🙏 Acknowledgments

Inspired by the solvers of Pascal Pons and John Tromp.

Related Work

Literature

The application of machine learning to board games remains an active and challenging research area, particularly due to the complexity and strategic depth of games like Chess, Go, and Connect Four. Unlike humans who can intuitively recognize patterns, artificial agents require structured learning approaches, often supported by carefully engineered features or representations.

A milestone in this field was Tesauro’s TD-Gammon, which demonstrated that self-play combined with temporal difference learning (TDL) could lead to expert-level performance in backgammon. Inspired by this success, many studies attempted to apply TDL to other board games, but the outcomes were often mixed due to higher complexity and lack of domain knowledge (Thill, 2012; Thill et al., 2012).

Prior work on Connect Four showed that learning strong strategies through self-play alone is feasible, but only with a very rich feature representation and a large number of training games. One such approach used N-tuple systems in combination with TDL to approximate value functions. These systems produced high-quality agents capable of defeating even perfect-play opponents, all without incorporating handcrafted game-theoretic knowledge. The success was largely attributed to the expressiveness of the N-tuple representation and extensive training with millions of games (Thill, 2012; Thill et al., 2012).
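Here is a minimal picture of an n-tuple value function trained with TD learning. Each tuple is a fixed list of board cells. The states of those cells are read as a base-`n_states` number that indexes the tuple's lookup table, and the value is tanh of the summed table entries. The sketch uses 3 cell states (empty, first player, second player) and a plain TD(0) step towards a caller-supplied target. This simplification is ours. The class name, the cell encoding, and the learning rate are assumptions, not the exact setup of the cited papers.

```python
import math
from typing import Iterator, Sequence, Tuple


class NTupleValue:
    """Hypothetical n-tuple network: V(s) = tanh(sum of one LUT entry per tuple)."""

    def __init__(self, tuples: Sequence[Sequence[int]], n_states: int = 3) -> None:
        self.tuples = [tuple(t) for t in tuples]  # each tuple lists board cell indices
        self.n_states = n_states
        self.luts = [[0.0] * (n_states ** len(t)) for t in self.tuples]

    def _indices(self, board: Sequence[int]) -> Iterator[Tuple[int, int]]:
        """Yield (tuple number, LUT index) for every tuple; board is a flat list of states."""
        for k, t in enumerate(self.tuples):
            idx = 0
            for cell in t:
                idx = idx * self.n_states + board[cell]  # read cells as base-n digits
            yield k, idx

    def value(self, board: Sequence[int]) -> float:
        return math.tanh(sum(self.luts[k][i] for k, i in self._indices(board)))

    def td_update(self, board: Sequence[int], target: float, alpha: float = 0.01) -> float:
        """One TD(0) step moving V(board) towards `target`; returns the TD error."""
        v = self.value(board)
        delta = target - v
        grad = 1.0 - v * v  # derivative of tanh w.r.t. its input
        for k, i in self._indices(board):
            self.luts[k][i] += alpha * delta * grad
        return delta
```

In self-play, `target` would typically be the final reward at the end of a game, or otherwise the (discounted) value of the successor position.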

Subsequent research introduced eligibility traces—including standard, resetting, and replacing variants—into these systems. Eligibility traces enhanced temporal credit assignment and significantly accelerated learning (by a factor of two) while improving asymptotic playing strength (Thill, 2015).
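Eligibility traces can be sketched for the sparse binary features an n-tuple system produces, where each active lookup-table entry contributes 1 to a linear value. The backward-view TD(λ) step below does three things. It decays every trace by γλ. It bumps the traces of the active entries, adding 1 for accumulating (standard) traces or setting them to 1 for replacing traces. It then moves all weights along δ·e. The `reset()` method, which zeroes all traces, is included as one plausible way to realize a resetting variant, for example after an exploratory move. That interpretation, and all names and defaults, are assumptions.

```python
from typing import Sequence


class TDLambda:
    """Backward-view TD(lambda) for a linear value over binary features."""

    def __init__(self, n_weights: int, alpha: float = 0.1, gamma: float = 1.0,
                 lam: float = 0.8, replacing: bool = False) -> None:
        self.w = [0.0] * n_weights  # one weight per lookup-table entry
        self.e = [0.0] * n_weights  # one eligibility trace per weight
        self.alpha, self.gamma, self.lam = alpha, gamma, lam
        self.replacing = replacing

    def value(self, active: Sequence[int]) -> float:
        return sum(self.w[i] for i in active)

    def reset(self) -> None:
        """Forget all credit, e.g. at the start of a game or after an exploratory move."""
        self.e = [0.0] * len(self.e)

    def step(self, active: Sequence[int], reward: float, next_value: float) -> float:
        """Update for state `active` given the reward and V(next state); returns delta."""
        delta = reward + self.gamma * next_value - self.value(active)
        decay = self.gamma * self.lam
        self.e = [trace * decay for trace in self.e]
        for i in active:  # the gradient of the linear value is 1 for active features
            self.e[i] = 1.0 if self.replacing else self.e[i] + 1.0
        for i, trace in enumerate(self.e):
            self.w[i] += self.alpha * delta * trace
        return delta
```

With replacing traces, an entry that is active in many consecutive positions never gets a trace above 1. Accumulating traces can grow beyond 1 in that case.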

To further improve training efficiency, recent studies investigated online-adaptable learning rate algorithms, such as Incremental Delta-Bar-Delta (IDBD) and Temporal Coherence Learning (TCL). A novel variant using geometric step-size adaptation outperformed conventional methods, reducing the number of required training games by up to 75% in some cases. The most effective algorithms proved to be those that combined geometric learning rates with nonlinear value functions and eligibility traces. These methods brought the total training requirement for learning Connect Four down to just over 100,000 games, a 13× improvement over earlier baselines (Bagheri et al., 2016).
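The IDBD rule mentioned above (Sutton's Incremental Delta-Bar-Delta) gives every weight its own learning rate α_i = exp(β_i). It adapts β_i with a meta step size θ, raising it while the current error keeps agreeing with that weight's recent updates. The sketch shows IDBD for a linear predictor only. It does not reproduce TCL or the geometric step-size variant of Bagheri et al., and the class name and default parameters are assumptions.

```python
import math
from typing import Sequence


class IDBD:
    """Incremental Delta-Bar-Delta (Sutton, 1992) for a linear predictor."""

    def __init__(self, n: int, theta: float = 0.01, init_alpha: float = 0.05) -> None:
        self.w = [0.0] * n
        self.beta = [math.log(init_alpha)] * n  # log of each weight's learning rate
        self.h = [0.0] * n  # decaying trace of recent weight updates
        self.theta = theta  # meta step size

    def predict(self, x: Sequence[float]) -> float:
        return sum(wi * xi for wi, xi in zip(self.w, x))

    def update(self, x: Sequence[float], target: float) -> float:
        """One IDBD step towards `target` (e.g. a TD target); returns the error."""
        delta = target - self.predict(x)
        for i, xi in enumerate(x):
            self.beta[i] += self.theta * delta * xi * self.h[i]
            alpha = math.exp(self.beta[i])
            self.w[i] += alpha * delta * xi
            self.h[i] = self.h[i] * max(0.0, 1.0 - alpha * xi * xi) + alpha * delta * xi
        return delta
```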

Finally, preliminary experiments also applied this enhanced learning framework to other strategic games, such as Dots-and-Boxes, showing the framework’s potential for broader generalization, though some unique domain-specific challenges were identified (Thill et al., 2014).

Connect-4 Game Playing Framework (C4GPF)

The C4GPF is a Java-based framework for training, evaluating, and interacting with Connect Four agents. It features a GUI and supports various agent types, including:

- Perfect-play Minimax agent with database and transposition table support.

- Reinforcement Learning agent using n-tuple systems and TD-learning with eligibility traces.

- Monte Carlo Tree Search (MCTS) agent.

- RL-Minimax hybrid, combining tree search with learned state evaluations.
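The RL-Minimax hybrid idea is to search a few plies and score the frontier with a learned evaluation. It can be sketched as a depth-limited negamax. The game is passed in as plain functions (`moves`, `play`, `terminal_value`, `evaluate`), all hypothetical names. `evaluate` stands in for a trained value function such as an n-tuple network and must score a position from the point of view of the player to move. This illustrates the general technique, not C4GPF's Java implementation.

```python
from typing import Any, Callable, List, Optional, Tuple


def negamax(state: Any, depth: int,
            moves: Callable[[Any], List[Any]],
            play: Callable[[Any, Any], Any],
            terminal_value: Callable[[Any], Optional[float]],
            evaluate: Callable[[Any], float]) -> Tuple[float, Any]:
    """Depth-limited negamax; returns (value, best move) for the side to move."""
    tv = terminal_value(state)
    if tv is not None:
        return tv, None  # game over: exact result
    if depth == 0:
        return evaluate(state), None  # learned evaluation replaces deeper search
    best_value, best_move = float("-inf"), None
    for m in moves(state):
        value, _ = negamax(play(state, m), depth - 1, moves, play, terminal_value, evaluate)
        if -value > best_value:  # the child's value is from the opponent's view
            best_value, best_move = -value, m
    return best_value, best_move


if __name__ == "__main__":
    # Toy take-away game: remove 1 or 2 stones; whoever takes the last stone wins.
    def toy_moves(n: int) -> List[int]:
        return [k for k in (1, 2) if k <= n]

    def toy_play(n: int, k: int) -> int:
        return n - k

    def toy_terminal(n: int) -> Optional[float]:
        return -1.0 if n == 0 else None  # the player facing an empty pile has lost

    def untrained_eval(n: int) -> float:
        return 0.0  # placeholder for a learned value function

    print(negamax(4, 10, toy_moves, toy_play, toy_terminal, untrained_eval))  # (1.0, 1)
```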

Key capabilities include:

- Animated or step-by-step agent matches.

- Benchmarking and head-to-head competitions.

- Visualization and editing of n-tuple lookup tables.

- Support for adaptive step-size algorithms (e.g., IDBD, TCL, AutoStep).

The framework is extensible and designed for research and teaching.

🔗 Repository: Connect-4 Game Playing Framework (C4GPF)

General Board Game Framework (GBG)

GBG is a flexible Java-based framework for general board game (GBG) learning and playing. Designed for research and education, it allows users to implement new board games or AI agents once and run them across all supported components.

Key features:

- Supports 1-player, 2-player, and n-player board games.

- Comes with a variety of built-in AI agents, including reinforcement learning and tree-based strategies.

- Standardized interfaces and abstract classes make it easy to plug in new games or agents.

- Enables fair competitions and benchmarking between agents across multiple games.

- Suitable for both classroom use and research projects.

The framework includes documentation, a GUI, and a technical report explaining its architecture.
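GBG's approach of implementing a game or agent once and reusing it with every component rests on agents talking to games only through a shared interface. GBG defines its own Java interfaces and abstract classes. The Python sketch below only illustrates the pattern. It uses hypothetical `Game` and `Agent` base classes, a generic `play_match` loop, and a toy take-away game, none of which are GBG names.

```python
from abc import ABC, abstractmethod
from typing import List, Optional, Sequence


class Game(ABC):
    """Hypothetical immutable game-state interface."""

    @property
    @abstractmethod
    def to_move(self) -> int: ...

    @abstractmethod
    def legal_moves(self) -> List[int]: ...

    @abstractmethod
    def play(self, move: int) -> "Game": ...  # returns the successor state

    @abstractmethod
    def winner(self) -> Optional[int]: ...  # None while the game is running


class Agent(ABC):
    @abstractmethod
    def choose(self, game: Game) -> int: ...


def play_match(game: Game, agents: Sequence[Agent]) -> Optional[int]:
    """Runs any Game with any Agents that honour the interface."""
    while game.winner() is None:
        game = game.play(agents[game.to_move].choose(game))
    return game.winner()


class TakeAway(Game):
    """Toy game: remove 1 or 2 stones; whoever takes the last stone wins."""

    def __init__(self, pile: int, player: int = 0) -> None:
        self.pile, self.player = pile, player

    @property
    def to_move(self) -> int:
        return self.player

    def legal_moves(self) -> List[int]:
        return [k for k in (1, 2) if k <= self.pile]

    def play(self, move: int) -> "TakeAway":
        return TakeAway(self.pile - move, 1 - self.player)

    def winner(self) -> Optional[int]:
        # Once the pile is empty, the player who just moved took the last stone.
        return None if self.pile else 1 - self.player


class FirstMoveAgent(Agent):
    def choose(self, game: Game) -> int:
        return game.legal_moves()[0]
```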

🔗 Repository: General Board Game Framework (GBG)

References

2016

- Online Adaptable Learning Rates for the Game Connect-4. IEEE Transactions on Computational Intelligence and AI in Games, 2016.

2015

2014

- Temporal Difference Learning with Eligibility Traces for the Game Connect-4. In CIG’2014, International Conference on Computational Intelligence in Games, Dortmund, 2014.

2012

- Reinforcement learning with n-tuples on the game Connect-4. In PPSN’2012: 12th International Conference on Parallel Problem Solving From Nature, Taormina, 2012.